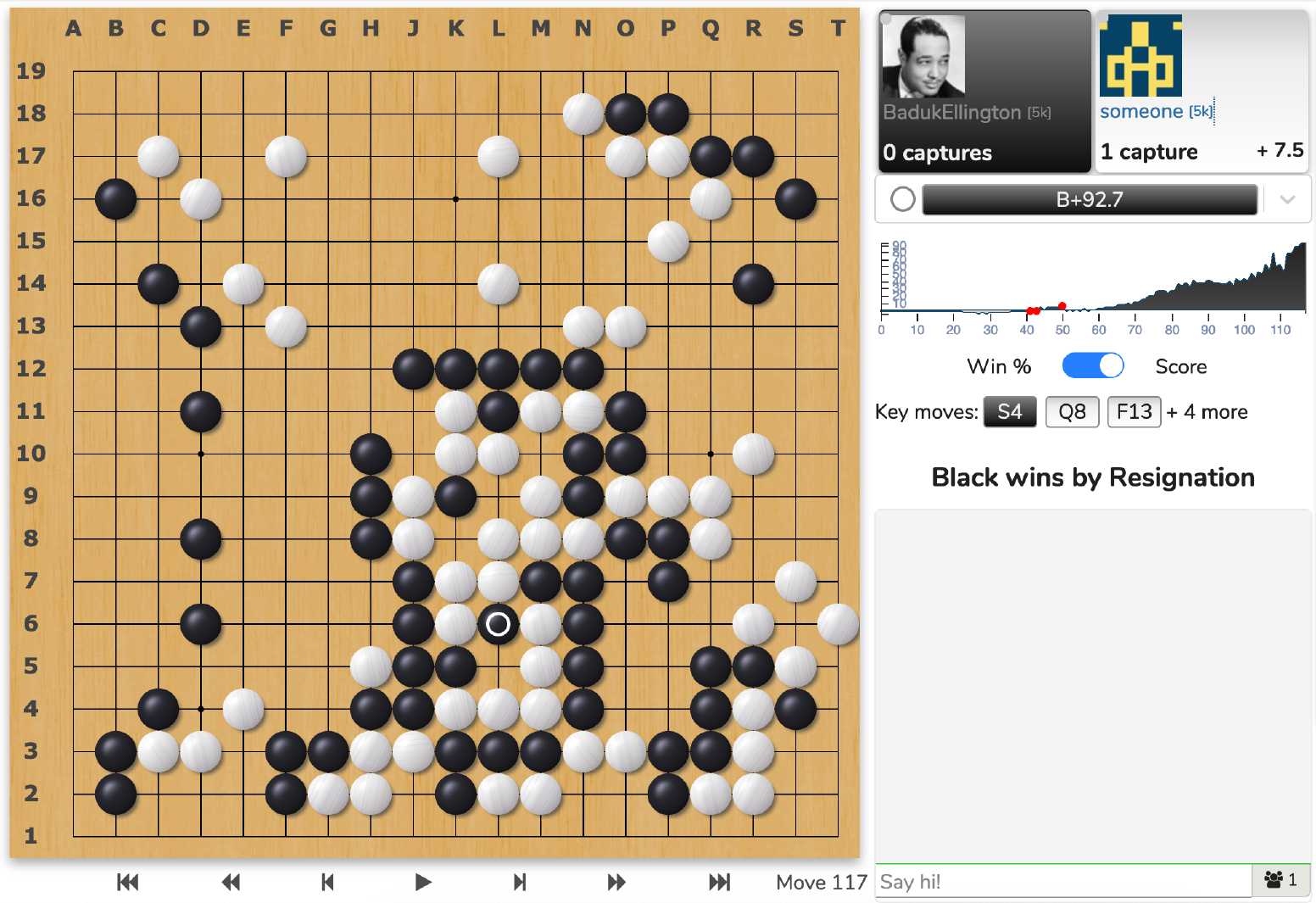

Baduk Ellington (2018)

BadukEllington is my Go AI, which you can play on the Online Go Server (OGS). At fast settings, it plays at a strong kyu level. At one point, it was among the top 10 most popular bots on OGS! (Maybe it still is, though there are more bots on OGS these days.)

Did I mention I wrote an entire book about how this works? (Please buy my book)

Go? What?

Go is the best game ever invented. It’s a pure abstract strategy game (like chess, but better). It originated in China thousands of years ago. It’s widely played throughout East Asia today, with a small but dedicated following elsewhere around the world.

Go was a notoriously difficult game for computers, but in 2016, the AlphaGo AI beat one of the all-time greatest players with a clever combination of deep learning and traditional tree search.

Technical notes

My goals were:

- keep the code simple

- use only the hardware I physically had in my house (a small server with a 1080 Ti GPU)

- make a bot that’s fun for amateurs to play.

With that in mind…

It uses an AlphaGo Zero-style search algorithm. I really like the elegance of AGZ’s tree search, and I wanted to keep the code as simple as possible.
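
For readers who haven’t seen it, here is a minimal sketch of the child-selection step in an AGZ-style search (the PUCT rule). It is an illustration, not code lifted from the bot: the names `Node` and `select_child`, the `c_puct` value of 1.5, and the `max(1, ...)` guard for an unvisited node are all assumptions of this sketch.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    prior: float                 # P(s, a): policy-head probability of the move
    visit_count: int = 0         # N(s, a): how often search went through here
    value_sum: float = 0.0       # W(s, a): sum of backed-up value estimates
    children: dict = field(default_factory=dict)  # move -> Node

    def mean_value(self) -> float:
        # Q(s, a); unvisited children are treated as 0.0 (an assumption).
        return self.value_sum / self.visit_count if self.visit_count else 0.0


def select_child(node: Node, c_puct: float = 1.5):
    """Return the (move, child) pair with the highest Q + U score."""
    total_visits = sum(c.visit_count for c in node.children.values())
    # Guard so the priors still break ties before any child is visited.
    sqrt_total = math.sqrt(max(1, total_visits))

    def score(item):
        _, child = item
        exploration = c_puct * child.prior * sqrt_total / (1 + child.visit_count)
        return child.mean_value() + exploration

    return max(node.children.items(), key=score)
```

The whole search is roughly this step repeated down the tree, one network evaluation at the leaf, and a backup of the value along the path, which is a big part of why it is so compact.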

It’s bootstrapped from human games. As much as I admire AGZ, I certainly did not have the kind of hardware resources to do millions of games of self-play. So I had to use human games to quickly get the network up to a playable level.

It supports no-komi and reverse-komi games (but not variable komi). Instead of assuming white always gets the komi, I added two feature planes: one means “I get komi”, and the other means “my opponent gets komi”. You can just zero them both out for no-komi. In training, I pretended 6.5 and 7.5 komi were the same thing, though obviously the difference could matter in a close game. “Weird komi” games were excluded from the training set.
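
A rough sketch of how two such planes could be built, using plain nested lists so it runs without any dependencies. The function name `komi_planes`, the string labels for the players, and the 19x19 default are assumptions for illustration; the real encoder may look quite different.

```python
BOARD_SIZE = 19  # assumed board size for this sketch


def komi_planes(komi_to, player_to_move, size=BOARD_SIZE):
    """Build the two komi feature planes from the mover's point of view.

    komi_to is "black", "white", or None (no komi).
    Returns (i_get_komi, opponent_gets_komi), each a size x size grid
    filled with a single constant, 1.0 or 0.0.
    """
    i_get = 1.0 if komi_to == player_to_move else 0.0
    opponent_gets = 1.0 if komi_to not in (None, player_to_move) else 0.0
    mine = [[i_get] * size for _ in range(size)]
    theirs = [[opponent_gets] * size for _ in range(size)]
    return mine, theirs
```

A normal game is `komi_to="white"`, reverse komi is `komi_to="black"`, and `komi_to=None` leaves both planes at zero.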

It supports handicap play. There were plenty of handicap games in the training set, which is fine for training the policy head. To train the value head, I applied discounting to the game outcomes. So the opening of every game is considered to be 50/50 for training purposes; the final position of the game uses the correct game outcome; and there’s a smooth decay in between. This prevents the bot from resigning on move 2 of a handicap game.
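
As an illustration of that discounting idea, here is a small sketch that produces one value target per position. It assumes a linear ramp from 0.5 to the final result and expresses targets as black’s win probability; the actual decay curve and target scale aren’t specified above, and `discounted_value_targets` is a made-up name.

```python
def discounted_value_targets(num_positions, black_won):
    """Value targets (black's win probability) for each position in a game.

    The first position is pinned to 0.5, the last to the real outcome,
    with a straight-line ramp in between (an assumed decay shape).
    """
    if num_positions < 1:
        raise ValueError("a game needs at least one position")
    outcome = 1.0 if black_won else 0.0
    if num_positions == 1:
        return [outcome]
    last = num_positions - 1
    return [0.5 + (outcome - 0.5) * i / last for i in range(num_positions)]
```

With targets like these, the first few positions of a 9-stone handicap game all sit close to 0.5, so the value head never learns that white is lost from the start.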

The code is available on GitHub. Warning: there’s a lot of “dead code” in there (various experiments that didn’t work out for whatever reason).

Active learning

I cooked up a scheme for active learning using the games it plays online against humans. However, it’s hard to tell if it works well. There are a few possibilities:

- My scheme works, but it only speeds up learning by like 2x (whereas I would need like a 20x speedup to see results on a reasonable timescale)

- My scheme doesn’t work, and it’s no better than plain old AGZ-style self-play

- My scheme would work, and drastically accelerate learning, but there’s a subtle bug in the training code somewhere

Unfortunately, it’s hard to tell with the amount of compute I have available. I’m still pretty curious about this area, so I may pick this back up again some day.

Gameplay notes

Most of the time, the bot plays pretty strong moves, maybe at a low- to mid-dan level. But it has blind spots around certain tactical situations, like ladders and semeai. This is because the tree search runs at a very low number of playouts. So even if you’re hopelessly behind in a game, you can often trick it into blundering the game away.

I keep the number of playouts low because:

- I’m running it on a server that’s physically in my house, using electricity that I pay for

- I wanted the bot to play quickly

- I wanted the bot to handle multiple games at once

- I wanted to keep my GPU free for my own purposes

I have a few ideas to improve its play in these situations – maybe I’ll find some time to experiment with them some day!

What’s up with the weird name?

“Go” is the Japanese name for the game. In Korea the game is called “baduk” (바둑).

Edward Kennedy “Duke” Ellington was a legendary jazz pianist and bandleader from the early 20th century.

To explain the joke, you must kill the joke.

Duke Ellington facts

- Like all great humans, Duke Ellington was born in DC.

- Duke Ellington’s piano teacher was Mrs. Clinkscales.

- My first CD was a compilation of Duke Ellington’s greatest hits.